“Before you become too entranced with gorgeous gadgets and mesmerizing video displays, let me remind you that information is not knowledge, knowledge is not wisdom, and wisdom is not foresight. Each grows out of the other, and we need them all.” – Arthur C. Clarke

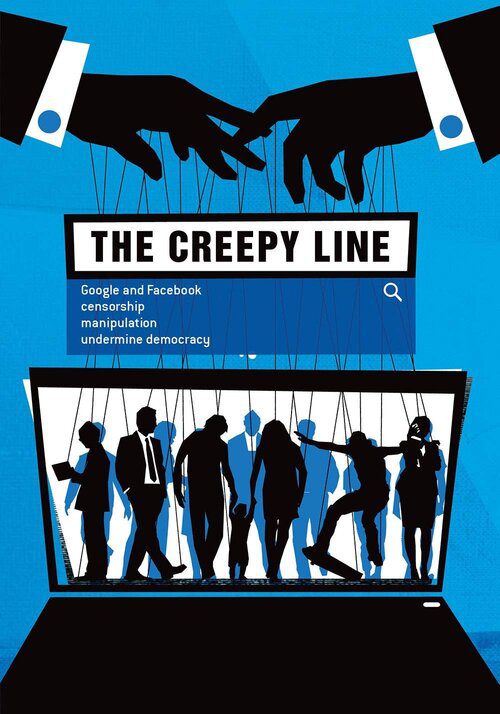

The Creepy Line is a 2018 American documentary exploring the influence Google and Facebook have on public opinion, and the power these companies wield beyond the reach of national government regulation.

There is what I call the creepy line. The Google policy on a lot of things is to get right up to the creepy line and not cross it. I would argue that implanting things in your brain is beyond the creepy line. – Eric Schmidt

This feature documentary reveals the stunning degree to which society is manipulated by Google and Facebook, and blows the lid off the remarkably subtle and powerful manner in which they do it. Offering first-hand accounts, scientific experiments and detailed analysis, the film examines what is at risk when these tech titans have free rein to utilize the public’s most private and personal data.

The Promise

At the end of the 20th century, Silicon Valley industry titans like Steve Jobs and Bill Gates made a promise that would forever alter how we perceive the world.

It spans the globe like a superhighway. It is called the “Internet”. Suddenly, you’re part of a new mesh of people, programs, archives, ideas. The personal computer and the Internet ushered humanity into the information age, laying the groundwork for unlimited innovation.

At the dawn of the 21st century, there would be a new revolution. A new generation of young geniuses made a new promise beyond our wildest dreams.

Sergey Brin (Google): “The idea is that we take all the world’s information and make it accessible and useful to everyone.”

Limitless information, artificial intelligence, machines that would know what we wanted and would tend to our every need. The technology would be beyond our imaginations, almost like magic, yet the concept would be as simple as a single word: search.

Peter Schweizer – NYT Best Selling Author

What I think is so interesting about Google and Facebook is that they were founded at American universities. At Stanford University, you had two students, Larry Page and Sergey Brin, who decided they wanted to create the ultimate search engine for the Internet.

Across the country, in the case of Facebook, you had Mark Zuckerberg, a student at Harvard, who decided he wanted to create a social media platform, basically to meet girls and to make friends, and hence we got Facebook.

Sergey Brin (Google): “I think that a company like Google, we have the potential to make very big differences, very big positive differences in the world and I think we also have an obligation as a consequence of that.”

Google handles 65% of all Internet search in the US and even more overseas.

Facebook and Google were started with a great idea and great ideals. Unfortunately, there was also a very dark side.

Search Yourself

Dr. Robert Epstein – Senior Research Psychologist, American Institute for Behavioral Research and Technology

In the early days, Google was just a search engine. Initially, the search engine was nothing more than an index of what’s on the Internet, and the Internet was pretty small back then. Theirs was not the first search engine, but they had a better index than the others that were around.

Luther Lowe – Senior Vice President, Yelp

Larry Page famously said that the point of Google is to get people onto Google and off of Google, out into the open web, as quickly as possible, and largely, they were really successful at that. The success of Google led, in many ways, to the success of the open web and to the explosive growth in start-ups and innovation during that period. Google had developed an incredible technology called PageRank, which grew out of a research project originally known as BackRub, and it was leaps and bounds ahead of services like Yahoo and AltaVista. Google quickly became the clear winner in the space on technology alone.

Instead of being a directory service like Yahoo was, Google spiders around the Internet, taking pictures of web pages. PageRank then analyzes the links between those pages and uses them to make some assumptions about relevance. So, if you’re looking for some history of Abraham Lincoln and there’s a bunch of links across the web pointing to a particular site, that must mean that this page is the “most relevant.”
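To make the link-counting idea concrete, here is a minimal Python sketch of PageRank computed by power iteration. It is a textbook simplification, not Google’s production ranking: the damping factor of 0.85 is the value suggested in the original PageRank paper, and the page names in the example are hypothetical.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Toy PageRank by power iteration.

    links maps each page to the list of pages it links to. Pages with no
    outgoing links ("dangling" pages) spread their rank evenly over all pages.
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start from a uniform distribution
    for _ in range(iterations):
        # Every page keeps a small baseline ("random jump") share.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its vote equally among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Dangling page: spread its vote over every page.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical mini-web: three pages link to the Lincoln history page.
example_web = {
    "blog-a": ["lincoln-history"],
    "blog-b": ["lincoln-history"],
    "school-site": ["lincoln-history", "blog-a"],
    "lincoln-history": ["blog-a"],
}
ranks = pagerank(example_web)
best = max(ranks, key=ranks.get)  # "lincoln-history"
```

The page with the most incoming links ends up with the highest score, which is the “a bunch of links pointing to a particular site” intuition expressed as arithmetic.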

Tracking

Now, they had to figure out: how do we make money off this? We’ve got an index. How do we make money? Well, they figured out how to do that, and it’s pretty simple. All you do is track people’s searches. Your search history is very, very informative. That’s gonna tell someone immediately whether you’re Republican or Democrat, whether you like one breakfast cereal versus another.

That’s gonna tell you whether you’re gay or straight. It’s gonna tell you thousands of things about a person over time, because they can see what websites you’re going to. And where do you go? Did you go to a porn site? Did you go to a shopping site? Did you go to a website looking up how to make bombs?

Peter Schweizer:

This information essentially consists of building blocks. They are constructing a profile of you, and that profile is real, it’s detailed, it’s granular, and it never goes away.

Targeted Advertising

This was really the first large-scale mechanism for targeted advertising. Say I want to sell umbrellas. Well, Google could tell you exactly, you know, who is looking for umbrellas, because they have your search history; they know. So, they could hook up the umbrella makers with people searching for umbrellas and basically send those people targeted ads. That is where Google gets more than 90% of its revenue.
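A minimal sketch of the umbrella example, assuming the simplest possible approach: count the words in a user’s past queries and show ads whose keyword appears there. The function names, the one-keyword-per-advertiser rule, and the sample data are all hypothetical; real ad systems are far more elaborate.

```python
from collections import Counter


def build_profile(search_history):
    """Count how often each word appears across a user's past queries."""
    profile = Counter()
    for query in search_history:
        profile.update(query.lower().split())
    return profile


def pick_ads(profile, ads):
    """Return advertisers whose keyword the user has searched for, most-searched first.

    ads maps an advertiser name to a single keyword (a deliberate simplification).
    """
    matches = [(profile[keyword], name) for name, keyword in ads.items() if profile[keyword] > 0]
    matches.sort(key=lambda m: (-m[0], m[1]))  # most searches first, then alphabetical
    return [name for _, name in matches]


# Hypothetical user history and advertisers.
history = ["cheap umbrella", "umbrella for windy days", "weather tomorrow", "best cereal"]
ads = {"AcmeUmbrellas": "umbrella", "CrunchyOats": "cereal", "SunHats": "sunhat"}
chosen = pick_ads(build_profile(history), ads)  # ["AcmeUmbrellas", "CrunchyOats"]
```

Even this crude profile already ranks the umbrella seller first, because the search history says the user cares about umbrellas more than anything else on offer.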

Don’t Be Evil

Steve Levy

When the “don’t be evil” slogan came up, it was during a meeting, I think in 2003 if I recall right. People were trying to say, “Well, what are Google’s values?” and one of the engineers who was invited to this meeting said, “We could just sum it all up by just saying ‘don’t be evil.’”

Peter Schweizer:

What’s so interesting about this promise that they were not going to be evil is that they never defined what evil was. They never even gave us a sense of what it meant, but people latched on to it, adopted it and trusted it because, of course, nobody wants to be associated with an evil company, so people gave the term “evil” their own meaning.

Dr. Jordan B. Peterson – Professor of Psychology, University of Toronto

Even their home page indicated to you that you weren’t being manipulated. They sort of broadcast to you the idea that this was a clean, simple, reliable, honest interface, and that the company that produced it wasn’t overtly nefarious in its operations. So, there were no ads on the front page, for example, which was a big deal. And so, everybody in that initial phase of excitement was, of course, more concerned about exploring the possibilities of the technology than about its potential costs.

Jaron Lanier – Computer Scientist

It was always kind of a generous thing, “We’re gonna give you these great tools, we’re gonna give you these things that you can use,” and then Google was saying, “No, actually, we’re gonna keep your data and we’re gonna organize society. We know how to improve and optimize the world.”

Luther Lowe

In 2007, there were a couple of trends that I think led Google off its path of being the place that just matched people with the best information on the Web and sent them off into the Internet. The first was the birth of smartphones: Steve Jobs unveiled the iPhone. The second was the rise of Facebook, and with it the notion of time spent on a site. Users were spending lots and lots of time on Facebook, and that became a really important metric that advertisers were paying attention to. So Google’s response was basically to deviate from that original ethos and become a place where they were trying to keep people on Google.

Google Chrome

So, they very rapidly started to branch out into other areas. They were getting a lot of information from people using the search engine, but if people went directly to a website, uh-oh, that’s bad, because now Google doesn’t know that. So, they developed a browser, which is now the most widely used browser in the world: Chrome.

By getting people to use Chrome, they were now able to collect information about every single website you visited, whether or not you were using their search engine. But of course, even that’s not enough, right? People now do a lot of things on their mobile devices and on their computers without using a browser, and you want to know what people are doing even when they’re not online.

Android Mobile Operating System

So, Google developed an operating system called Android, which is the dominant operating system on mobile devices, and Android records what we’re doing, even when we are not online. As soon as you connect to the Internet, Android uploads to Google a complete history of where you’ve been that day, among many other things. It’s a progression of surveillance that Google has been engaged in since the beginning.

When Google develops another tool for us to use, they’re doing it not to make our lives easier, they’re doing it to get another source of information about us.

Dr. Jordan B. Peterson

These are all free services, but obviously, they’re not, because huge complicated machines aren’t free. Your interaction with them is governed so that it will generate revenue.

And that’s what Google Docs is, and that’s what Google Maps is, and literally now more than a hundred different platforms that Google controls.

Companies that use the surveillance business model, the two biggest being Google and Facebook, don’t sell you anything. They sell you! We are the product.

Peter Schweizer:

Now, what Google will tell you is, “We’re very transparent. There’s a user agreement. Everybody knows that if you’re going to search on Google and we’re going to give you access to this free information, we need to get paid, so we’re going to take your data.”

The problem is, most people don’t believe, or don’t want to believe, that that data is going to be used to manipulate them.

Math is Math, Right?

The commonality between Facebook and Google, even though they’re different platforms, is math. They’re driven by math and the ability to collect data.

Mark Zuckerberg (Interview Video): You sign on, you make a profile about yourself by answering some questions and entering some information, such as your concentration or major at school, contact information such as phone numbers, instant messaging screen names, anything you want to tell. Interests, what books you like, movies, and most importantly, who your friends are.

Peter Schweizer:

The founders of these companies were essentially mathematicians who understood that by collecting this information, you not only could monetize it and sell it to advertisers, but you could actually control and steer people or nudge people in the direction that you wanted them to go.

Barry Schwartz – CEO, Rusty Brick

An algorithm basically determines how certain things should respond. For example, you give it some inputs; in the case of Google, you enter a query, a search phrase, and then certain math makes decisions about what information should be presented to you. Algorithms are used throughout everyday life, in everything from search engines to how cars drive.

Marlene Jaeckel – Co-Founder, Polyglot Programming

You decide the variables, and when you have a human factor deciding what the variables are, it’s gonna affect the outcome.

Tristan Harris – Co-Founder/Time Well Spent (TED Talk)

I want you to imagine walking into a room, a control room with a bunch of people, a hundred people hunched over at desks with little dials, and that that control room will shape the thoughts and feelings of a billion people. This might sound like science fiction, but this actually exists right now today.

I used to be in one of those control rooms. I was a design ethicist at Google, where I studied, “How do you ethically steer people’s thoughts?” Because what we don’t talk about is how a handful of people working at a handful of technology companies will, through their choices, steer what a billion people are thinking today.

Dr. Jordan B. Peterson

Our ethical presuppositions are built into the software, but our unethical presuppositions are built into the software as well, so whatever it is that motivates Google is going to be built right into the automated processes that sift our data. What Google essentially is, is a gigantic compression algorithm. A compression algorithm looks at a very complex environment and simplifies it, and that’s what you want when you put a term into a search engine: you want all the possibilities sifted so that you find the one you can essentially take action on.

And so Google simplifies the world and presents the simplification to you, which is also what your perceptual systems do, and so basically we’re building a giant perceptual machine that’s an intermediary between us and the complex world. The problem with that is that whatever the assumptions are that Google operates under are going to be the filters that determine how the world is simplified and presented.

Bias

The algorithm they use to show us search results was written for the precise purpose of doing two things, which makes it inherently biased, and those two things are (1) filtering and (2) ordering. Filtering means the algorithm has to look at all the different web pages in its index, and it’s got billions of them, and it’s got to make a selection.

Let’s say I type in, “What’s the best dog food?” It has to look up all the dog foods in its database and all the websites associated with those dog foods; that’s the filtering. But then it’s got to put them into an order, and that’s the ordering. So, the filtering is biased, because why did it pick those websites instead of the other 14 billion? And the ordering is biased, because why did it put Purina first and some other company second? Yet it would be useless to us unless it was biased.

That’s precisely what we want. We want it to be biased. So, the problem comes in, obviously, when we’re not talking about dog foods but about things like elections.

Because if we’re asking questions about issues like immigration or about candidates, well, again, that algorithm is gonna do those two things. It’s gonna do the filtering, so it’s gonna do some selecting from among billions of web pages, and then it’s gonna put them into an order. It will always favor one dog food over another, one online music service over another, one comparative shopping service over another, and one candidate over another.
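The two stages described above, filtering and then ordering, can be sketched in a few lines of Python. This is a toy model, not Google’s algorithm: the three-page index, the term-frequency score, and the boosted score that favors one site are illustrative assumptions, and the `.example` domains are hypothetical.

```python
def search(index, query, score):
    """Two-stage search: filter the index, then order the survivors by `score`."""
    terms = query.lower().split()
    # Stage 1, filtering: keep only pages that contain every query term.
    candidates = []
    for url, text in index.items():
        words = text.lower().split()
        if all(term in words for term in terms):
            candidates.append((url, words))
    # Stage 2, ordering: highest score first (ties keep index order).
    candidates.sort(key=lambda c: score(c[0], c[1], terms), reverse=True)
    return [url for url, _ in candidates]


def term_frequency(url, words, terms):
    """A simple relevance score: how many times the query terms appear."""
    return sum(words.count(term) for term in terms)


def boosted(url, words, terms):
    """Same score, plus a hypothetical thumb on the scale for one site."""
    return term_frequency(url, words, terms) + (10 if url == "purina.example" else 0)


# Hypothetical three-page index.
index = {
    "purina.example": "best dog food reviews dog food",
    "othercompany.example": "best dog food dog food dog food",
    "catfood.example": "best cat food",
}
```

Filtering drops the cat food page either way; swapping `term_frequency` for `boosted` changes nothing visible to the user except which site comes first, which is exactly why the ordering step carries so much weight.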

Rajan Patel – Search Scientist (Interview)

The Google search algorithm is made up of several hundred signals that we try to put together to serve the best results for each user.

Amit Singhal – Google Fellow (Interview)

Just last year, we launched over 500 changes to our algorithm, so by some count we change our algorithm almost every day, almost twice over.

Dr. Jordan B. Peterson:

Now it’s possible that they’re using unbiased algorithms, but they’re not using unbiased algorithms to do things like search for unacceptable content on Twitter and on YouTube, and on Facebook. Those aren’t unbiased at all. They’re built specifically to filter out whatever’s bad.

Jaron Lanier (Panel Discussion):

What happens is the best technical people with the biggest computers with the best bandwidth to those computers become more empowered than the others, and a great example of that is a company like Google, which for the most part is just scraping the same Internet and the same data any of us can access and yet is able to build this huge business of directing what links we see in front of us, which is an incredible influence on the world, by having the bigger computers, the better scientists, and more access.

Sundar Pichai – CEO, Google (Interview)

As a digital platform, we feel like we are on the cutting edge of the issues which society is grappling with at any given time. Where do you draw the line of freedom of speech and so on?

Dr. Jordan B. Peterson:

The question precisely is, “What’s good and bad, according to whose judgment?” And that’s especially relevant given that these systems serve a filtering purpose. They’re denying or allowing us access to information before we see and think, so we don’t even know what’s going on behind the scenes.

The people who are designing these systems are building a gigantic unconscious mind that will filter the world for us, and it’s increasingly going to be tuned to our desires in a manner that will benefit the purposes of the people who are building the machines.

The Bubble

Peter Schweizer

What these algorithms basically do is they create bubbles for ourselves, because the algorithm learns, “This is what Peter Schweizer is interested in,” and what does it give me when I go online? It gives me more of myself.
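The feedback loop behind the bubble can be illustrated with a toy recommender that, as an assumed engagement rule, only shows items from the topic a user has clicked most. Every name and item below is hypothetical; the sketch only shows how clicking the top suggestion feeds a user’s own past behavior back to them.

```python
from collections import Counter


def recommend(click_history, catalog, k=3):
    """Hypothetical engagement rule: show up to k items from the most-clicked topic."""
    if not click_history:
        return catalog[:k]
    favourite_topic = Counter(topic for topic, _ in click_history).most_common(1)[0][0]
    return [item for item in catalog if item[0] == favourite_topic][:k]


def simulate(catalog, first_click, rounds=5):
    """Feed the user's clicks back into the recommender and record what they see."""
    history = [first_click]
    topics_seen = set()
    for _ in range(rounds):
        feed = recommend(history, catalog)
        topics_seen.update(topic for topic, _ in feed)
        history.append(feed[0])  # assume the user always clicks the top item
    return topics_seen


catalog = [
    ("politics", "Budget debate explained"),
    ("science", "New telescope images"),
    ("sports", "Match highlights"),
    ("sports", "Transfer rumours"),
    ("cooking", "Weeknight pasta"),
]
seen = simulate(catalog, ("sports", "Match highlights"))  # {"sports"}
```

One click on a sports story is enough: after that, the feed never shows politics, science or cooking again, because every new click only reinforces the topic that was already winning.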

Franklin Foer – Journalist/The Atlantic (Interview)

I mean, it’s narrowing your vision in some ways. It’s funneling my vision; it’s leading me to a view of the world. And it may not be Facebook’s view of the world, but it’s the view of the world that will make Facebook the most money. They’re attempting to addict us and they’re addicting us on the basis of data.

Dr. Jordan B. Peterson:

The problem with that is that you only see what you already know, and that’s not a good thing, because you also need to see what you don’t know, because otherwise, you can’t learn.

Peter Schweizer

And it creates a terrible dynamic when it comes to the consumption of information and the news, because it leads us to see the world only in a very, very small and narrow way. It makes us easier to control and easier to manipulate.

What is your Source….Code?

Peter Schweizer:

I think what’s so troubling about Google and Facebook is you don’t know what you don’t know, and while the Internet provides a lot of openness, in the sense that you have access to all this information, the fact of the matter is you have basically two gatekeepers: Facebook and Google, which control what we have access to.

Jim Roberts – Executive Editor and Chief Content Officer – Mashable

News organizations have to be prepared for their home pages becoming less important. People are using Facebook as their homepage; they’re using Twitter as their own homepage. Those news streams are their portals into the world of information.

Studies show now that individuals expect the news to find them.

Fake News

Peter Schweizer:

Well, fake news is not even really a description of anything anymore. It’s a weapon, it gets tossed around. If there’s a real news story that somebody doesn’t like, they just dismiss it as fake news. It’s kind of a way of trying to get it to go away and it’s being misused. It’s being used as a way to dismiss actual factual evidence that something has happened.

Dr. Robert Epstein

There are three reasons why we should not worry about fake news stories.

First, fake news is competitive. You can place your fake news stories about me and I can place my fake news stories about you; it’s completely competitive. There have always been fake news stories. There have always been fake TV commercials and fake billboards. That has always existed and always will.

Second, you can see them. They’re visible; you can actually see them with your eyeballs, and because you can see them, you can weigh in on them. You also know that there’s a human element, because there’s usually the name of an author on a fake news story. You can decide just to ignore them.

Generally speaking, most news stories, like most advertisements, are not actually read by anyone, so you put them in front of someone’s eyeballs, and people just kind of skip right over them.

The third reason why fake news stories are not really an issue is something called confirmation bias. We pay attention to, and actually believe, things that confirm what we already believe.

Confirmation bias is the tendency to search for, interpret, favor, and recall information in a way that confirms or supports one’s prior beliefs or values.

So, you don’t really change people’s opinions with fake news stories, you don’t. You support beliefs that people already have.

The real problem is these new forms of influence that (1) are not competitive, (2) people can’t see, and (3) are not subject to confirmation bias. If you can’t see something, confirmation bias can’t kick in, so we’re in a world right now in which our opinions, beliefs, attitudes, voting preferences and purchases are all being pushed one way or another every single day by forces that we cannot see.

Fake news is the shiny object they want us to chase that really isn’t relevant to the problems we’re facing today.

Marlene Jaeckel:

We use Google so extensively; the average person does, you know, multiple queries per day, and some more than others, and over time, you don’t realize that it’s impacting you. And we already know that people are not necessarily fact-checking on their own; they rely on media companies, they rely on mainstream media that rely on companies like Google to give them answers about the world, without necessarily asking, “Is that true?”

Facebook by the numbers: 2 trillion posts, 1.6 billion users, and 1.5 billion searches per day.

What your Facebook friends post can have a direct effect on your mood. New research shows the more negative posts you see, the more negative you could become.

Phil Kerpen – President, American Commitment

Section 230 of the Communications Decency Act

We really, I think, should be concerned when these companies that represent themselves as neutral platforms are actually exercising editorial control and pushing particular political viewpoints, because they’re enjoying a special protected legal status on the theory that they’re providing a neutral platform for people to speak, to have a diversity of views and so forth. That status is based on Section 230 of the Communications Decency Act, which protects them from legal liability, and this is the magic sauce, from a legal standpoint, that makes these large platform companies possible: they’re not legally responsible for the things that people say on their sites.

“No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider” (47 U.S.C. § 230).

It’s the people who say the things on their site who bear legal responsibility. If they had to be legally responsible for everything that everyone says on their sites, their business model would break down. It wouldn’t work very well. But I think that there’s something wrong when you use the legal liability that you were given to be a neutral platform at the same time you’re exercising very aggressive editorial control to essentially pick sides politically.

The Creepy Line

Dr. Jordan B. Peterson:

Well, it’s an interesting word, “creepy”, right? Because it’s a word that connotes horror. He didn’t say, “Dangerous,” he didn’t say, “Unethical.” There are all sorts of words that could have fit in that slot. He said, “Creepy.” And a creep is someone who creeps around and follows you and spies on you for unsavory purposes, right? That’s the definition of a creep.

You know, I don’t think the typical ethical person says, “I’m going to push right up to the line of creepy and stop there.” You know, they say more something like, “How about we don’t get near enough to the creepy line so that we’re ever engaging in even pseudo-creepy behavior?”

Because creepy is really bad, you know, it’s… A creepy mugger is worse than a mugger. The mugger wants your money. God only knows what the creepy mugger wants; it’s more than your money.

Search Engine Manipulation Effect

For Google alone, we’re studying three very powerful ways in which they’re impacting people’s opinions. The phenomenon we discovered a few years ago, SEME, the search engine manipulation effect, is a list effect.

A list effect is an effect that a list has on some aspect of our cognitive functioning. What’s higher in a list is easier to remember, for example. SEME is an example of a list effect but with a difference, because it’s the only list effect I’m aware of that is supported by a daily regimen of operant conditioning that tells us that what’s at the top of search results is better and truer than what’s lower in the list. It’s gotten to the point that fewer than 5% of people click beyond page one, because the stuff on page one, typically at the top in that above-the-fold position, is the best. Most of the searches we conduct are just simple, routine searches.

What is the capital of Kansas? And on those simple searches, over and over again, the correct answer appears right at the top. So, we are learning over and over and over again, and then the day comes when we look up something we’re really unsure about, like: Where should I go on vacation? Which car should I buy? Which candidate should I vote for? And we do our little search, and there are our results, and what do we know? What’s at the top is better. What’s at the top is truer.

That is why it’s so easy to use search results to shift people’s opinions. This kind of phenomenon is really scary compared to something like fake news, because it’s invisible. There’s a manipulation occurring, and people can’t see it. It’s not competitive because, for one thing, Google for all intents and purposes has no competitors. Google controls 90% of search in most of the world. Google is only going to show you one list, in one order, and that list has a dramatic impact on the decisions people make and on people’s opinions, beliefs and so on.

The second thing they do is what you might call autofill. If you are searching for a political candidate, Congressman John Smith, you type in “Congressman John Smith” and they will give you several options that act as a prompt, as it were, for what you might be looking for. If you have four positive search suggestions for a candidate, well, guess what happens? SEME happens.

People whose opinions can be shifted shift because people are likely to click on one of those suggestions that’s gonna bring them search results, obviously, that favor that candidate. That is going to connect them to web pages that favor that candidate, but now we know that if you allow just one negative to appear in that list, it wipes out the shift completely, because of a phenomenon called negativity bias.

Negativity bias is the notion that, even when of equal intensity, things of a more negative nature (e.g. unpleasant thoughts, emotions, or social interactions; harmful or traumatic events) have a greater effect on one’s psychological state and processes than neutral or positive things.

A single negative suggestion can, in some demographic groups, draw 10 to 15 times as many clicks as a neutral item in the same list. It turns out that Google is manipulating your opinions from the very first character that you type into the search bar.

So, this is an incredibly powerful and simple means of manipulation. If you are a search engine company and you want to support one candidate or one cause or one product or one company, just suppress negatives in the search suggestions for that one, but don’t suppress negatives for the other candidate, the other cause, the other product. Over time, all kinds of people will shift their opinions in the direction you want them to shift.
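A toy sketch of the asymmetry described above, assuming a hypothetical list of suggestions and a crude word list for spotting negative ones. It only illustrates the mechanism the film attributes to search suggestions; it makes no claim about how any real autocomplete system is built.

```python
# Hypothetical word list used to flag a suggestion as "negative".
NEGATIVE_WORDS = {"scandal", "lies", "arrested"}

# Hypothetical pool of suggestions the engine could show.
SUGGESTIONS = [
    "john smith voting record",
    "john smith scandal",
    "john smith biography",
    "john smith speech",
    "john smith endorsements",
    "jane doe scandal",
    "jane doe biography",
]


def is_negative(suggestion):
    """True if any word of the suggestion is on the negative word list."""
    return any(word in NEGATIVE_WORDS for word in suggestion.lower().split())


def autocomplete(prefix, suggestions, suppress_negatives=False, limit=4):
    """Return up to `limit` suggestions starting with `prefix`, optionally hiding negatives."""
    matches = [s for s in suggestions if s.lower().startswith(prefix.lower())]
    if suppress_negatives:
        matches = [s for s in matches if not is_negative(s)]
    return matches[:limit]
```

Calling `autocomplete("john smith", SUGGESTIONS, suppress_negatives=True)` returns four neutral or positive prompts, while `autocomplete("jane doe", SUGGESTIONS)` still leads with “jane doe scandal”. Nothing in either list tells the user that one candidate was treated differently.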

And then third, very often Google gives you a box: they will give you search results, but the results are below the box, and up above is a box that just gives you the answer. When it comes to local search, which it turns out is the most common thing people do on Google, 40% of all searches, it’s not so clear. If I’m doing a search for a pediatrician in Scranton, Pennsylvania, what happens is Google still bisects the page, shoves the organic, merit-based information far down the page and plops its answer box at the top, but it’s populating that box with its own restricted set of information that it’s drawing from its own proprietary sandbox.

These are just Google’s reviews that it’s attempted to collect over the years. Users are habituated to assume the stuff at the top is the best, but instead, it’s just what Google wants them to see, and it looks the same, and what happens is Google’s basically able to put its hand on the scale and create this direct consumer harm, because that mom searching for the pediatrician in Scranton is not finding the highest-rated pediatrician according to Google’s own algorithm.

She’s just getting served the Google thing, no matter what, and that, I think, is leading to, you know, terrible outcomes in the offline world. So, Google has at its disposal, on the search engine itself, at least three different ways of impacting your opinion, and it is using them. We’re talking about a single company having the power to shift the opinions of literally billions of people, without anyone having the slightest idea that they’re doing so.

All the Best in your quest to get Better. Don’t Settle: Live with Passion.
